What’s wrong with Deep Research for GTM teams

And how you can build or tweak a Deep Research agent to source prospects and grow accounts.


“Deep Research” has been one of my favorite tools since before it was officially branded across the major LLM platforms.

This all started back in 2022–2023. That’s when early AI power users began building “deep research” stacks to read 10-Ks, parse blog posts, listen to podcasts, and pull in CRM notes.

Back then, you needed a Retrieval-Augmented Generation (RAG) pipeline or a notebook-style UI. The infrastructure was costly and clunky. Over time, these capabilities became cheaper and better integrated, first via LLM notebooks, and eventually through native assistant chat interfaces.

What used to be called “custom research workflows” has now been rebranded as Deep Research.

Google unveiled its Gemini 1.5 Deep Research in December 2024, OpenAI followed with its Deep Research in February 2025, and Perplexity introduced its own Deep Research shortly after. Meanwhile, DeepSeek, Alibaba’s Qwen, and Grok rolled out Search and Deep Search features for their chatbot assistants. Alongside these, dozens of copycat open-source implementations of Deep Research have popped up on GitHub.

But despite all the hype, Deep Research has real limitations, especially when applied to business use cases.

In this post, I’ll explore where today’s deep research falls short and why the future lies in vertical AI: tools purpose-built to support specific workflows for GTM teams.

What is Deep Research

At a high level, Deep Research tools work like autonomous AI agents. Think of an agent as a self-directed digital researcher: it plans, executes, and refines a multi-step process to accomplish a goal.

This means the agent uses an LLM to work out its research strategy, runs web searches to gather material, and then uses the LLM again to make sense of what it found. A typical Deep Research agent repeats this loop:

Planning

The agent decomposes the query, defines subgoals, and decides what to do next (e.g., run a web search, open a PDF, analyze a page).

Acting

It uses tools, such as a headless browser or an API, to retrieve information and synthesize relevant insights.

Observing

It evaluates whether the goal is met. If not, it loops back to planning and continues iterating.

Publish

Once done, it generates a cohesive report by weaving together structured outputs.

This is often called an agentic loop, mimicking the mental model of a human researcher “spinning” through a problem.
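
As a rough illustration, the plan, act, observe, publish cycle can be sketched in a few lines of Python. This is a minimal sketch, not how any vendor implements it: `run_agent`, `ResearchState`, and the stub functions are hypothetical names, and the stubs stand in for real LLM and search calls so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ResearchState:
    goal: str
    notes: list[str] = field(default_factory=list)
    steps_taken: int = 0


def run_agent(
    goal: str,
    plan: Callable[[ResearchState], str],
    act: Callable[[str], str],
    is_done: Callable[[ResearchState], bool],
    max_steps: int = 5,
) -> str:
    """Plan -> act -> observe until the goal is met, then publish a report."""
    state = ResearchState(goal=goal)
    while state.steps_taken < max_steps:
        next_query = plan(state)             # Planning: choose the next sub-question
        state.notes.append(act(next_query))  # Acting: call a tool and keep the result
        state.steps_taken += 1
        if is_done(state):                   # Observing: stop once the goal is met
            break
    # Publish: stitch the collected notes into one report
    return f"Report on {state.goal}:\n" + "\n".join(f"- {n}" for n in state.notes)


# Hypothetical stubs (assumptions): a real agent would call an LLM and a search tool here.
def fake_plan(state: ResearchState) -> str:
    return f"{state.goal} (angle {state.steps_taken + 1})"


def fake_search(query: str) -> str:
    return f"finding for '{query}'"


def enough_notes(state: ResearchState) -> bool:
    return len(state.notes) >= 3


report = run_agent("lidar suppliers", fake_plan, fake_search, enough_notes)
print(report)
```

The `max_steps` cap is a design choice worth keeping in any real version: without it, an agent that never judges its goal met would loop (and spend tokens) indefinitely.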

In most early agent systems (and even in tools like LangChain), the execution flow resembled a Directed Acyclic Graph (DAG) — meaning:

Each step in the process is predefined

It moves in one direction

There’s no looping or stateful branching

Decisions are made outside the model by an orchestrator
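
A pipeline with these four properties might look like the sketch below. The function names and the two-sentence corpus are made up for illustration; the point is only that the step order lives in a fixed list owned by the orchestrator, and nothing inside a step can change it.

```python
def build_query(user_input: str) -> str:
    """Normalize the raw user input into a search query."""
    return user_input.strip().lower()


def retrieve(query: str) -> list[str]:
    """Return documents that share at least one word with the query."""
    # Hypothetical in-memory corpus (an assumption for this sketch)
    corpus = ["Acme raised a seed round", "Globex opened a new plant"]
    return [doc for doc in corpus if any(word in doc.lower() for word in query.split())]


def respond(docs: list[str]) -> str:
    """Turn the retrieved documents into a final answer string."""
    return " | ".join(docs) if docs else "No results"


# The orchestrator owns the order of steps: predefined, one-directional, no loops.
PIPELINE = [build_query, retrieve, respond]


def run_pipeline(user_input: str) -> str:
    value = user_input
    for step in PIPELINE:
        value = step(value)
    return value


print(run_pipeline("  Seed round "))
```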

This worked well for deterministic pipelines, like:

"user → query → retrieval → response".

Bringing reasoning capabilities into LLMs was the pivotal moment that unlocked the second wave of agents.

The early wave of LLMs (GPT-3 era) could generate, but not really reason. They lacked structured memory, planning, or multi-step coherence. Agents built on them were primarily prompt chains or glorified macros.

But from 2022 through 2024, we saw:

Toolformer & ReAct patterns formalized

Tree of Thought, Program-Aided Language Models (PAL), and Chain of Thought emerged

OpenAI’s function calling, Anthropic’s tool use, and Claude 3’s strong reasoning began to show real promise

LLMs became not just good at text but also good at thinking through text—structured, stateful, and iterative.

Now, with reasoning-aware LLMs, we see something more like a Finite State Machine (FSM):

The model remembers its state (implicitly or explicitly)

Transitions between states (e.g., "plan", "execute", "reflect") are influenced by the model's reasoning

There are loops, retries, and branching logic

The agent is no longer just a consumer of structure — it helps guide it

So it’s not just "step 1 → step 2 → done", but

"step 1 → evaluate → maybe loop → branch → step 3 → done"

In short, agents moved from static, hardcoded execution (DAG) to dynamic, adaptive reasoning flows (FSM-like behavior), where the LLM controls the flow.

This agentic loop is the engine that drives progress toward the goal, and each stage has a purpose. At its center is an LLM that can reason and use tools (via methods like function calling).
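
To make the FSM framing concrete, here is a small sketch using the "plan", "execute", and "reflect" states mentioned above. It is an illustration under assumptions: `run_fsm` and `stub_decision` are hypothetical names, and the stub stands in for the LLM call that would decide the transition after reflecting.

```python
from enum import Enum, auto
from typing import Callable


class State(Enum):
    PLAN = auto()
    EXECUTE = auto()
    REFLECT = auto()
    DONE = auto()


def run_fsm(
    decide_after_reflect: Callable[[int], State],
    max_transitions: int = 20,
) -> list[State]:
    """Walk plan -> execute -> reflect; the injected callable picks the state after REFLECT."""
    state, trace, attempts = State.PLAN, [], 0
    while state is not State.DONE and len(trace) < max_transitions:
        trace.append(state)
        if state is State.PLAN:
            state = State.EXECUTE
        elif state is State.EXECUTE:
            attempts += 1
            state = State.REFLECT
        else:  # REFLECT: this is where the model's reasoning steers the flow
            state = decide_after_reflect(attempts)
    trace.append(state)  # record the final state (DONE, unless the cap was hit)
    return trace


# Hypothetical stand-in for the LLM's judgment: loop back once, then finish.
def stub_decision(attempts: int) -> State:
    return State.DONE if attempts >= 2 else State.PLAN


trace = run_fsm(stub_decision)
print(" -> ".join(s.name for s in trace))
```

Unlike a fixed pipeline, the path through the states is not known in advance: it depends on what the decision function returns at each reflection step.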

What powers Deep Research agents

Here’s a simplified breakdown of the stack:

Instruction Layer

A system prompt defines goals, constraints, and strategies (e.g., “Research stock movement on cnbc.com”).

Tools

Agents use calculators, browsers, scrapers, summarizers, and other APIs to gather and transform data.

OpenAI’s Deep Research agent is likely boosted by its headless browser, recently introduced via Operator.

Environment (optional)

The infrastructure the agent runs on—hardware, network, security boundaries, etc.—affects speed and access.

Guardrails (optional)

Policies or filters that block undesirable behavior (e.g., generating harmful content, accessing restricted domains).
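
One way to picture how these layers fit together is a single configuration object. The sketch below is an assumption-heavy illustration: `AgentConfig`, `call_tool`, `fake_browser`, and the blocked domain are all hypothetical, and the guardrail shown is only a simple domain blocklist.

```python
from dataclasses import dataclass, field
from typing import Callable
from urllib.parse import urlparse


@dataclass
class AgentConfig:
    system_prompt: str                                      # instruction layer
    tools: dict[str, Callable[[str], str]]                  # tool name -> callable
    blocked_domains: set[str] = field(default_factory=set)  # guardrail

    def call_tool(self, name: str, url: str) -> str:
        """Run a tool on a URL unless the guardrail blocks the host."""
        host = urlparse(url).hostname or ""
        # Block the listed domain itself and any of its subdomains
        if any(host == d or host.endswith("." + d) for d in self.blocked_domains):
            return f"blocked by guardrail: {host}"
        return self.tools[name](url)


# Hypothetical tool (assumption): a real agent would drive a headless browser here.
def fake_browser(url: str) -> str:
    return f"fetched {url}"


config = AgentConfig(
    system_prompt="Research stock movement on cnbc.com",
    tools={"browser": fake_browser},
    blocked_domains={"example.org"},
)
print(config.call_tool("browser", "https://www.cnbc.com/markets"))
print(config.call_tool("browser", "https://intranet.example.org/secret"))
```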

The problem: benchmarks are misaligned

Despite all the advances, today’s Deep Research tools are optimized for academic tasks, not business use cases.

Benchmarks like GAIA and PaperBench measure how well an AI can answer multi-step, assistant-style questions or replicate published AI research. These are useful for AI research but don’t reflect what go-to-market teams need.

What if I’m a manufacturing company trying to analyze which lidar sensors Tesla uses? What if I want to identify the Q1 revenue of a niche SaaS firm across 3 different investor presentations?

These aren’t “IQ tests” for a general-purpose model. They’re high-stakes, real-world queries that impact revenue and GTM strategy.

However, we currently do not have a standardized way to measure deep research agents based on business relevance, speed, or actionability.

As Ben Evans put it when critiquing low-quality sources:

“Statista, which is basically an SEO spam house that aggregates other people’s data. Saying ‘Source: Statista’ is kind of like saying ‘Source: Google Search.’”

We don’t need more generic answers. We need domain-specific tools that can reason through noise and surface signal.

I’m especially bullish on two applications of deep research agents—one for prospecting & list-building, and another for slicing through CRM noise to uncover the highest-value nurture plays in existing accounts.

Better Deep Research for Prospecting

For this piece, I've asked Dima to discuss the feasibility of specialized solutions. He's developing a verticalized, deep research solution for business sourcing, called Extruct.

Unlike horizontal Deep Research tools, Extruct AI exploits the structure of the problem: each company can be assessed independently, and so can each question about it. It can present deep research as a table in which each cell gets its own AI agent instance. As a result, the research as a whole can cumulatively draw on around 100x the context window and tool calls, which promises more accurate results and deeper insights.

In verticalized deep research, we can adapt the original architecture, mainly at the planning stage. Instead of merely iterating through a list of reports, the agent systematically treats each company as an individual entity.

For instance, Extruct introduces a clever twist: it breaks down research into a structured table, where every cell is processed by its dedicated AI agent. This makes it easy to dive deeper and add extra data points after the initial research.
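
The table idea can be sketched as a fan-out over every (company, question) pair. This is not Extruct's actual implementation, which the source does not describe in detail: `research_cell` is a hypothetical stand-in for one agent run with its own context and tool budget, and the company names and questions are invented.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def research_cell(company: str, question: str) -> str:
    """Hypothetical stand-in for one independent agent run on a single cell."""
    return f"answer({company}, {question})"


def research_table(companies: list[str], questions: list[str]) -> dict[str, dict[str, str]]:
    """Fill a company x question table, one independent agent call per cell."""
    cells = list(product(companies, questions))
    with ThreadPoolExecutor(max_workers=8) as pool:
        # pool.map returns results in the same order as the input cells
        answers = list(pool.map(lambda cell: research_cell(*cell), cells))
    table: dict[str, dict[str, str]] = {company: {} for company in companies}
    for (company, question), answer in zip(cells, answers):
        table[company][question] = answer
    return table


table = research_table(["Acme Plywood", "Globex Timber"], ["HQ country", "Employee count"])
for company, row in table.items():
    print(company, row)
```

Because cells are independent, adding a column later means running agents only for the new question, which is what makes it cheap to extend the table after the initial research.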

Extruct reports strong results for companies with low online visibility: 90% accuracy versus Perplexity’s 67.5% in niche segments like plywood manufacturers. Give it a try!

Deep Research inside your CRM

Alongside standalone agent stacks, a new breed of tools is weaving deep research directly into CRM and web platforms.

Vendors like Actively.ai, CommonRoom.io, Rox, and Tome.app apply multi-step reasoning across both private deal data and external sources (10-Ks, blog posts, support transcripts) so that insights surface in the same interface reps use every day. These tools are pitched as “GTM Superintelligence” or a sales “Second Brain”: systems that continuously learn from closed-won patterns to optimize revenue outcomes rather than merely automating workflows.

CommonRoom.io focuses on “community intelligence,” ingesting signals from Slack, Discord, customer forums, newsletters and support tickets, mapping engagement and sentiment to account health scores inside Salesforce or HubSpot.

Rox deploys “agent swarms” that plan and prioritize by merging external feeds (news, social, public filings) with internal CRM records, then drive personalized emails and LinkedIn messages, and finally send daily digests, pre-meeting briefs, follow-ups and even CRM updates—all without leaving your data warehouse.

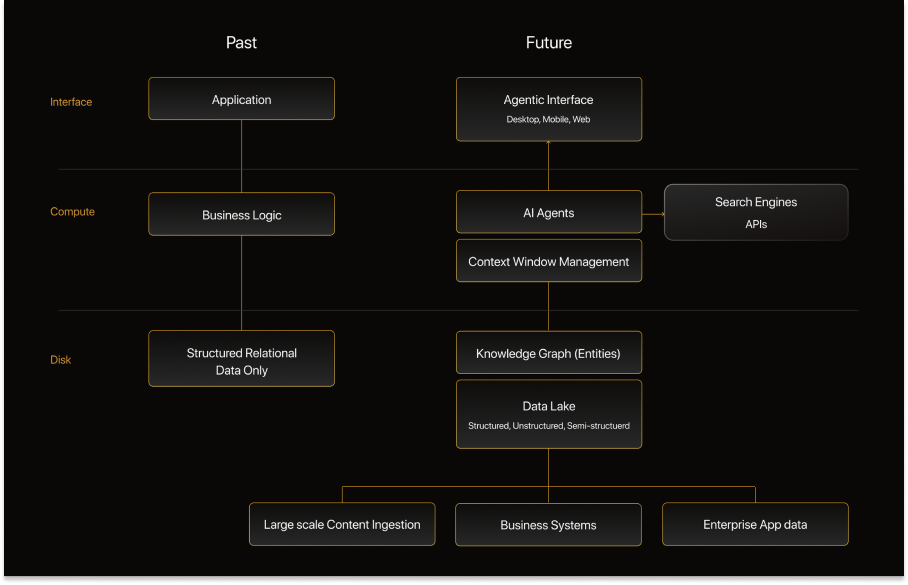

The Revenue Stack of the Past versus the Future according to Rox

Tome.app acts as a “second brain” for sales: trained on your playbook and CRM data, it pinpoints high-value accounts, maps buying committees, generates tailored account overviews and financial summaries, and even auto-builds meeting prep decks and discovery question scripts.

What unites them is the shift from “more input = more output” to “reasoning over noise”—capturing institutional knowledge, surfacing only the accounts that matter, and embedding deep research into the workflows GTM teams use every day.

What excites me most is how a single deep-research layer can now power both ends of your funnel—spinning up highly targeted prospect lists and, in the same interface, cutting through CRM clutter to surface expansion, churn-risk, and nurture plays.

You prompt your agent (“find me mid-market SaaS companies raising Seed with immigrant founders”), and it delivers a scored list ready for outreach. Then, when deals land in your pipeline, that same agent lives in your CRM to flag untapped upsell opportunities and churn signals based on your custom criteria.

Sounds like the future of GTM, except it is already here today.

How are you blending prospecting and account research in your GTM stack today?